tl;dr: AI is now a practical, high-value capability for quality and compliance teams. Properly applied, it reduces blind spots, accelerates triage, raises the signal-to-noise ratio in CAPA workstreams, and helps teams surface the small but material patterns that predict regulatory scrutiny. AI is not a substitute for technical judgment or governance; it is an accelerant for focused investigation, risk scoring, and pre-inspection readiness. Key recent trends include widespread enterprise AI use, regulators deploying agency-wide AI tools, and the rapid expansion of the life-sciences AI market.

Why advanced compliance teams should treat AI as infrastructure, not a toy

For advanced QA/regulatory operations, AI must be treated as production-grade infrastructure. That means the AI capability is versioned, validated, access-controlled, integrated into SOPs, and governed by metrics. When this is done, AI shifts compliance work in three ways: it converts forensic, manual tasks into reproducible queries; it surfaces recurring process failures earlier than human review alone; and it enables scalable, prioritized remediation planning across global networks of sites and suppliers. When it is done poorly (ad hoc models, undocumented prompts, unsafe data handling), AI adds risk rather than removing it.

Recent context that makes AI adoption urgent for quality teams

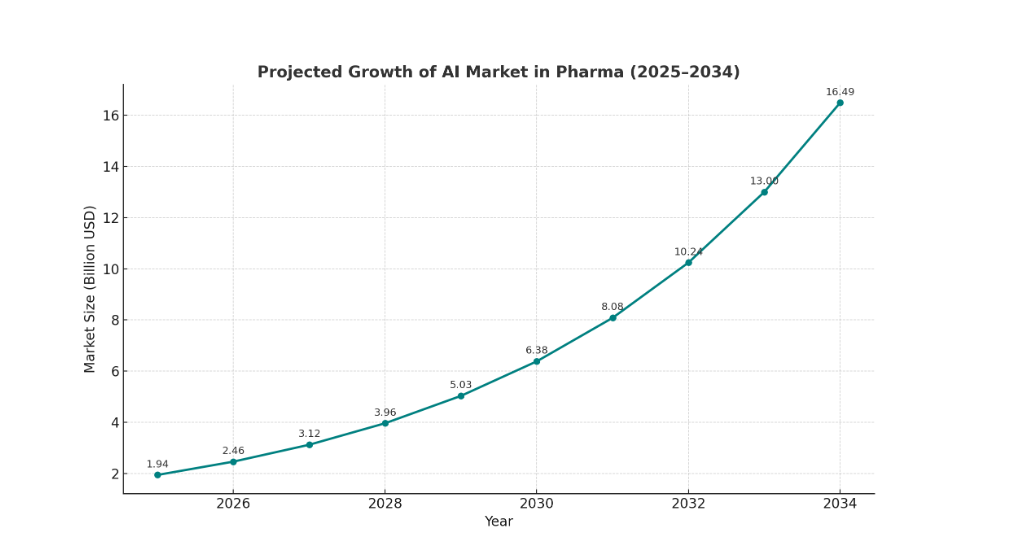

Enterprise adoption of AI jumped materially in 2024–25; multiple major surveys show that a large majority of organizations are using AI in at least one area of the business, creating both opportunities and expectations for modern tooling in regulated functions. The FDA itself launched an agency-wide generative AI capability in 2025, signaling that regulator workflows will increasingly be data-driven and AI-assisted. The market for AI in pharma and biotech is expanding, with several analyst firms estimating market sizes in the low-to-mid billions of dollars for 2025 and projecting high double-digit compound annual growth through the decade. These facts change the playing field: regulators and commercial partners will expect faster, evidence-driven responses, and inspectors may focus on signals that AI tools surface.

Core AI capabilities that materially reduce inspection risk

- Pattern detection across decentralized evidence

Advanced models can ingest thousands of textual artifacts (Form 483s, warning letters, audit reports, CAPA histories, batch records, and supplier files) and return clusters of recurring observations. For example, rather than treating three similar lab deviations as separate incidents, AI can group them by root contributor (instrument validation, analyst training, environmental monitoring) and quantify recurrence. That grouping drives prioritized CAPAs rather than firefighting of discrete events.

- Risk scoring and prioritization for CAPA portfolios

AI can produce a reproducible risk score for each CAPA by combining severity (safety/quality impact), recurrence, time open, and business exposure (revenue or patient population). The result is a sortable remediation backlog, which often reveals that a small number of CAPAs account for most of the regulatory exposure.

- Automated submission and document checks

Natural language routines can detect internal inconsistencies (mismatched batch numbers, date conflicts, missing signatures) that typically trigger inspector follow-up questions. These checks apply to responses to regulators, deviation investigation files, and labeling dossiers; removing these trivial but time-consuming errors materially lowers the chance of follow-up inspection triggers.

- Inspector and district intelligence

By analyzing prior inspection outcomes by district, inspector, and product class, AI can produce a short “inspector brief” summarizing typical focus areas, recent observations, and the kinds of evidence that have closed similar findings. This reduces rehearsal time and helps focus documentary bundles for inspection day.

- Supplier and network risk analytics

AI combines audit data, complaint patterns, recall history, and shipment anomalies to create supplier risk heatmaps. For companies with multi-tier supply chains, this is often the only practical way to spot upstream signals before they appear as finished-product deviations.
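The CAPA risk-scoring idea above can be sketched as a weighted sum of normalized factors. This is a minimal sketch, not a validated scoring model: the 1-to-5 severity scale, the normalization caps (10 recurrences, 365 days open), the weights, and the `Capa`, `risk_score`, and `prioritize` names are all hypothetical and would need to be defined and validated with SMEs.

```python
from dataclasses import dataclass

# Hypothetical weights (they sum to 1.0, so scores fall in [0, 1]); set and validate with SMEs.
WEIGHTS = {"severity": 0.4, "recurrence": 0.3, "age": 0.2, "exposure": 0.1}


@dataclass(frozen=True)
class Capa:
    capa_id: str
    severity: int    # assumed SME-assigned scale: 1 (minor) to 5 (critical)
    recurrence: int  # number of linked recurring events
    days_open: int
    exposure: float  # assumed pre-normalized business/patient exposure in [0, 1]


def _scale(value: float, cap: float) -> float:
    """Clamp value to [0, cap] and rescale it to [0, 1]."""
    return min(max(value, 0.0), cap) / cap


def risk_score(c: Capa) -> float:
    components = {
        "severity": _scale(c.severity - 1, 4),   # maps 1..5 onto 0..1
        "recurrence": _scale(c.recurrence, 10),  # hypothetical cap of 10 events
        "age": _scale(c.days_open, 365),         # hypothetical cap of one year
        "exposure": _scale(c.exposure, 1.0),
    }
    return round(sum(WEIGHTS[k] * v for k, v in components.items()), 4)


def prioritize(capas):
    # Highest score first; tie-break on capa_id so the order is reproducible.
    return sorted(capas, key=lambda c: (-risk_score(c), c.capa_id))


backlog = [
    Capa("CAPA-001", severity=5, recurrence=4, days_open=200, exposure=0.8),
    Capa("CAPA-002", severity=2, recurrence=0, days_open=30, exposure=0.1),
    Capa("CAPA-003", severity=4, recurrence=8, days_open=400, exposure=0.5),
]
for capa in prioritize(backlog):
    print(capa.capa_id, risk_score(capa))
```

The explicit tie-break on the CAPA ID keeps the ranking deterministic, which matters if the backlog order itself becomes part of the audit record.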

How AI changes the production of evidence and auditability

Advanced compliance teams treat AI outputs as an investigative starting point, not as the final record. Each AI flag must have a traceable provenance: the document(s) that produced the insight, the model version and parameters used, the timestamp, and the reviewer who accepted or rejected the finding. This level of traceability turns AI into a reproducible amplifier of audit evidence rather than an opaque “black box.” Good engineering practice is to log queries and output hashes so that regulators or internal auditors can reconstruct both the input and the human decisions that followed.
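One way to make this kind of query-and-output logging tamper-evident is a hash chain, in which each entry stores the hash of the previous one. The sketch below assumes a simple in-memory list; the field names, document names, model version string, and reviewer ID are hypothetical, and a production system would write to append-only storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def _canonical(entry: dict) -> str:
    # Sorted keys give a stable serialization, so the same entry always hashes the same way.
    return json.dumps(entry, sort_keys=True)


def make_log_entry(prev_hash, source_docs, model_version, params,
                   query, output, reviewer, decision):
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "source_docs": sorted(source_docs),
        "model_version": model_version,
        "params": params,
        "query_sha256": sha256_hex(query),
        "output_sha256": sha256_hex(output),
        "reviewer": reviewer,
        "decision": decision,  # e.g. "accepted" or "rejected" by the SME
        "prev_hash": prev_hash,
    }
    entry["entry_hash"] = sha256_hex(_canonical(entry))
    return entry


def verify_chain(entries) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev = GENESIS_HASH
    for e in entries:
        body = {k: v for k, v in e.items() if k != "entry_hash"}
        if e["prev_hash"] != prev or sha256_hex(_canonical(body)) != e["entry_hash"]:
            return False
        prev = e["entry_hash"]
    return True


log = [make_log_entry(GENESIS_HASH, ["483-obs-017.pdf"], "clf-1.3.0", {"threshold": 0.7},
                      "recurring data integrity observations?", "flag: audit trail gaps",
                      "sme.reviewer", "accepted")]
log.append(make_log_entry(log[-1]["entry_hash"], ["capa-2291.docx"], "clf-1.3.0",
                          {"threshold": 0.7}, "open CAPAs linked to flag?",
                          "flag: CAPA-2291 overdue", "sme.reviewer", "rejected"))
print(verify_chain(log))

tampered = [dict(e) for e in log]
tampered[0]["decision"] = "rejected"  # simulate an after-the-fact edit
print(verify_chain(tampered))
```

Storing only hashes of the query and output keeps sensitive text out of the log while still letting an auditor confirm that a retained copy matches what was logged.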

Concrete KPIs for measuring AI value in compliance

Time to triage: median hours/days saved per inspection readiness review.

False positive ratio: percent of AI flags that require no action after SME review.

Action concentration: percent of total regulatory exposure captured by the top 10% of CAPAs ranked by AI.

Repeat finding reduction: year-over-year decline in identical Form 483 observations attributed to AI-identified remediation.

Remediation velocity: time from AI flag to CAPA initiation and closure.
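Two of these KPIs reduce to simple arithmetic once SME review outcomes and exposure estimates are recorded. A minimal sketch, assuming review outcomes are stored as strings such as "no_action" and that each CAPA's exposure is assessed independently of the AI ranking (otherwise the metric is circular); the function names and example values are hypothetical.

```python
import math


def false_positive_ratio(review_outcomes):
    """Share of AI flags that SMEs closed with no action."""
    if not review_outcomes:
        return 0.0
    return sum(1 for o in review_outcomes if o == "no_action") / len(review_outcomes)


def action_concentration(capas, top_fraction=0.10):
    """capas: (ai_score, exposure) pairs; returns the share of total exposure
    held by the top `top_fraction` of CAPAs when ranked by AI score."""
    total = sum(exposure for _, exposure in capas)
    if total == 0:
        return 0.0
    ranked = sorted(capas, key=lambda pair: pair[0], reverse=True)
    k = max(1, math.ceil(len(ranked) * top_fraction))
    return sum(exposure for _, exposure in ranked[:k]) / total


outcomes = ["capa_opened", "no_action", "training_update", "no_action"]
capas = [(0.90, 50), (0.80, 10), (0.70, 10), (0.60, 5), (0.50, 5),
         (0.40, 5), (0.30, 5), (0.20, 4), (0.10, 3), (0.05, 3)]
print(f"False positive ratio: {false_positive_ratio(outcomes):.0%}")
print(f"Action concentration (top 10%): {action_concentration(capas):.0%}")
```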

Implementation roadmap for advanced teams, governance first

Phase 0 — Data governance and inventory

Start by cataloging all relevant sources: inspection reports, Form 483s, warning letters, batch records, digitized lab notebooks, OOS/OOT investigations, supplier audits, complaint logs, and CAPA files. Define consistent site and product names, and normalize dates and identifiers; without this step, AI outputs can be unreliable. Tools like Atlas make this process easier by organizing and standardizing your data so it is ready for analysis.
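The normalization step can start as simply as a controlled alias table plus explicit date parsing. This sketch assumes a hypothetical alias map and a fixed list of accepted date formats, including US-style month/day/year, which must be confirmed per source because a date such as 03/07/2024 is ambiguous. Unmapped site names are flagged rather than guessed.

```python
from datetime import datetime

# Hypothetical alias table; in practice this is controlled master data, not code.
SITE_ALIASES = {
    "boston plant": "SITE-BOS-01",
    "bos-01": "SITE-BOS-01",
    "boston mfg": "SITE-BOS-01",
}

# Accepted source formats, tried in order. "%m/%d/%Y" assumes US-style dates.
DATE_FORMATS = ("%Y-%m-%d", "%d-%b-%Y", "%m/%d/%Y")


def normalize_site(raw: str) -> str:
    key = " ".join(raw.lower().split())  # case- and whitespace-insensitive lookup
    # Unknown names are flagged for a data steward instead of being guessed.
    return SITE_ALIASES.get(key, "UNMAPPED:" + raw.strip())


def normalize_date(raw: str) -> str:
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {raw!r}")


print(normalize_site("  Boston   Plant "), normalize_date("07-Mar-2024"))
print(normalize_site("Cork site"), normalize_date("03/07/2024"))
```

Raising on unrecognized dates, rather than silently skipping them, keeps bad records visible during the inventory phase.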

Phase 1 — Pilot a high-value use case with a rigorous validation plan

Pick one problem: e.g., trend detection in Form 483s for a product family. Define success metrics (precision, recall, time saved) and a validation protocol where SMEs label model outputs for a defined period. Use the pilot to tune thresholds and to shape the human review playbook.
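During the pilot, SME labels make precision and recall straightforward to compute at several candidate thresholds. A minimal sketch, assuming each pilot item is stored as a pair of a model score and an SME relevance label; the data below is illustrative only.

```python
def precision_recall_at(scored, threshold):
    """scored: (model_score, sme_says_relevant) pairs labeled by SMEs during the pilot."""
    tp = sum(1 for s, rel in scored if s >= threshold and rel)
    fp = sum(1 for s, rel in scored if s >= threshold and not rel)
    fn = sum(1 for s, rel in scored if s < threshold and rel)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


pilot = [(0.95, True), (0.85, True), (0.80, False),
         (0.60, True), (0.40, False), (0.20, False)]

# Sweeping thresholds shows the precision/recall trade-off the review playbook must accept.
for t in (0.5, 0.7, 0.9):
    p, r = precision_recall_at(pilot, t)
    print(f"threshold={t:.1f} precision={p:.2f} recall={r:.2f}")
```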

Phase 2 — Operationalize with audit trails and SOPs

Document model versioning, access control, approval gates, and SOPs that show how AI outputs convert into CAPA tickets, training, or corrective measures. Integrate AI outputs into existing QMS (electronic CAPA systems) rather than creating parallel trackers.

Phase 3 — Scale and integrate into inspection readiness cycles

Make AI a standing input to management reviews, internal audit planning, supplier oversight, and pre-inspection drills. Use AI dashboards during mock inspections to guide evidence bundles and to rehearse inspector questions.

Model risk, validation, and regulatory readiness

Model validation for compliance requires the same rigor as validation of other regulated computational tools. Validation activities must show that model outputs are reproducible, stable under expected data drift, and suitable for the intended use. Establish a periodic retrain-and-revalidate cadence based on data volume and shifts in regulatory focus. Maintain a “holdout” dataset of past inspections to measure backward-looking performance after each model update.
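The article does not prescribe a drift metric; one commonly used option is the population stability index (PSI), shown here over categorical labels such as observation themes. The category names and data are hypothetical, and the often-quoted rule of thumb used below (under 0.1 stable, above 0.25 a significant shift) is a heuristic that a validation protocol would need to justify for its own data.

```python
import math
from collections import Counter


def category_shares(labels, categories, eps=1e-6):
    counts = Counter(labels)
    total = len(labels)
    # eps keeps log() defined when a category is absent from one sample
    return {c: max(counts[c] / total, eps) for c in categories}


def population_stability_index(baseline, current):
    categories = sorted(set(baseline) | set(current))
    b = category_shares(baseline, categories)
    c = category_shares(current, categories)
    return sum((c[k] - b[k]) * math.log(c[k] / b[k]) for k in categories)


# Hypothetical observation themes: validation-time baseline vs. the latest quarter
baseline = ["data_integrity"] * 50 + ["cleaning"] * 30 + ["training"] * 20
current = ["data_integrity"] * 20 + ["cleaning"] * 30 + ["training"] * 50

psi = population_stability_index(baseline, current)
status = "stable" if psi < 0.1 else "review" if psi < 0.25 else "significant shift"
print(f"PSI={psi:.3f} -> {status}")
```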

Key governance artifacts to maintain

A validation protocol document; an SOP for AI-driven decision workflows; a register of model versions and retrain dates; a security and privacy risk assessment (data access logs, vendor controls); and human review logs documenting SME acceptance or rejection of each material AI finding.

Human factors and change management for QA teams

Adoption fails when SMEs see AI as a threat or a checkbox. Successful adoption emphasizes augmentation: AI reduces repetitive reading so SMEs can focus on root cause analysis, practical remediation, and process redesign. Invest in role redesign — triage analysts, AI reviewers, and remediation owners — and track trust metrics so the organization knows when AI is delivering actionable intelligence versus noise.

Operational risks and mitigation strategies

Data quality: Poorly digitized or inconsistent records produce unreliable outputs. Mitigation: start with the highest quality source sets and progressively bring more variable data into scope after cleaning.

Overreliance: Treating AI outputs as final increases regulatory risk. Mitigation: require documented SME sign-off for any AI-initiated change.

Vendor and IP risk: Third-party platforms that ingest proprietary data create exposure. Mitigation: contractual controls, encryption, on-premise or private cloud deployment, and data minimization.

Security: AI tooling increases the attack surface. Mitigation: role-based access, endpoint controls, and periodic penetration testing.

Recent industry signals and numbers that matter to decision makers

A majority of organizations reported AI use across at least one function in 2024–25, indicating rapid normalization of AI in enterprise operations. The FDA deployed an internal, agency-wide generative AI tool in 2025 to optimize workflows across scientific review and inspection support; this suggests inspectors will increasingly have AI-assisted briefings that summarize risk themes, potentially shortening the time from flag to inspection. Market estimates for AI in life sciences vary, with multiple analyst houses reporting multi-billion-dollar totals for 2025 and aggressive growth projections through 2030; the exact numbers differ by scope, but the direction is consistent: significant, sustained investment. These signals matter because they indicate both the expectation of AI-driven capability from counterparties and the competitive imperative to modernize QA tooling.

Practical architecture patterns for compliant AI deployment

Hybrid on-prem + cloud inference: store sensitive documents on-prem and perform model inference in a secure cloud enclave with controlled I/O. This balances scalability with data protection.

Model orchestration and retrain pipelines: implement CI/CD for models (pipeline for new data ingestion, retrain, validation, and deployment) with automated tests for precision/recall on holdout sets.
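The automated test step in such a pipeline can be a release gate that compares a candidate model's holdout metrics against fixed floors and against the currently deployed model. The thresholds and metric values below are hypothetical placeholders; in a regulated setting they would come from the validation protocol.

```python
# Hypothetical release criteria; in practice these come from the validation protocol.
MIN_PRECISION = 0.80
MIN_RECALL = 0.70
MAX_REGRESSION = 0.02  # largest tolerated drop versus the deployed model


def release_gate(candidate: dict, deployed: dict) -> list:
    """Compare holdout metrics; an empty list means the candidate may go to human approval."""
    failures = []
    for metric, floor in (("precision", MIN_PRECISION), ("recall", MIN_RECALL)):
        if candidate[metric] < floor:
            failures.append(f"{metric} {candidate[metric]:.3f} is below the floor of {floor:.2f}")
        drop = deployed[metric] - candidate[metric]
        if drop > MAX_REGRESSION:
            failures.append(f"{metric} regressed by {drop:.3f} versus the deployed model")
    return failures


deployed = {"precision": 0.86, "recall": 0.78}
candidate = {"precision": 0.88, "recall": 0.74}
problems = release_gate(candidate, deployed)
print("PASS" if not problems else "FAIL: " + "; ".join(problems))
```

Returning reasons rather than a bare boolean gives reviewers something to file with the validation record; a passing gate should still route to a human approval step rather than deploy automatically.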

Explainability layers: include a lightweight explainability engine that returns the top contributing phrases, document links, and confidence scores for every flag. This materially accelerates SME review.
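For a linear, term-weighted scorer, explainability can be as direct as reporting each matched phrase's contribution. This sketch assumes hypothetical phrase weights and a hypothetical bias that maps the score to a confidence between 0 and 1 with a logistic function; more complex models would need a dedicated attribution method.

```python
import math
import re
from collections import Counter

# Hypothetical phrase weights, e.g. exported from a linear model trained on past observations.
TERM_WEIGHTS = {
    "audit trail": 1.4,
    "deleted": 1.1,
    "not reviewed": 0.9,
    "calibration": 0.6,
    "training": 0.3,
}
BIAS = -2.0  # hypothetical intercept used to convert the score into a confidence


def explain(doc_id: str, text: str, top_k: int = 3) -> dict:
    lowered = text.lower()
    contributions = Counter()
    for term, weight in TERM_WEIGHTS.items():
        # Word boundaries stop "deleted" from matching inside "undeleted".
        hits = len(re.findall(r"\b" + re.escape(term) + r"\b", lowered))
        if hits:
            contributions[term] = hits * weight
    score = sum(contributions.values())
    confidence = 1.0 / (1.0 + math.exp(-(score + BIAS)))  # logistic squashing to (0, 1)
    return {
        "doc_id": doc_id,  # link back to the source document for the reviewer
        "confidence": round(confidence, 3),
        "top_terms": contributions.most_common(top_k),
    }


result = explain("483-obs-017",
                 "Audit trail entries were deleted and the audit trail was not reviewed.")
print(result)
```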

Integration with QMS: push AI-flagged items into the electronic CAPA system with metadata (source doc, confidence score, reviewer) so that AI becomes traceable within standard workflows.
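A sketch of the metadata such a push might carry, assuming a hypothetical payload shape; real eQMS products define their own CAPA schemas and APIs, so these field names would need to be mapped onto the vendor's interface. The checks encode two rules from this article: confidence must be a valid probability, and no flag becomes a ticket without a named SME reviewer.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class AiFlagForQms:
    """Hypothetical payload shape; map these fields onto your eQMS vendor's CAPA schema."""
    title: str
    source_doc: str
    confidence: float
    model_version: str
    reviewer: str        # SME who accepted the flag; empty means not yet reviewed
    log_entry_hash: str  # links back to the audit-log record for this flag

    def to_json(self) -> str:
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be in [0, 1]")
        if not self.reviewer:
            raise ValueError("flag must be SME-reviewed before it becomes a CAPA ticket")
        return json.dumps(asdict(self), sort_keys=True)


flag = AiFlagForQms(
    title="Recurring audit trail review gaps, QC lab",
    source_doc="483-obs-017.pdf",
    confidence=0.94,
    model_version="clf-1.3.0",
    reviewer="sme.reviewer",
    log_entry_hash="placeholder-hash",
)
print(flag.to_json())
```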

Vendor selection checklist for advanced teams

Data scope: How large and varied is the vendor’s document corpus, and can it ingest proprietary archives?

Latency and alerting: Does the platform provide near-real-time alerts for new regulatory postings (Form 483s, warning letters)?

Explainability: Can the tool show provenance for each finding and give a human-consumable rationale?

Validation support: Does the vendor provide tools and raw outputs to support your independent validation activities?

Deployment options: Does the vendor offer on-prem, private cloud, or validated SaaS deployment, with encryption in transit and at rest?

Audit and compliance features: Are logs immutable, and does the tool support exportable audit trails?

Total cost of ownership: Consider onboarding, data cleaning, retraining costs, and the SME time needed to validate outputs.

Examples of measurable outcomes from production deployments (typical ranges reported by adopters)

50–80% reduction in document review time for pre-inspection packet preparation.

40–70% reduction in the time to identify recurring root causes across audit populations.

20–40% reduction in repeat Form 483 observations when AI-identified patterns are remediated and tracked.

These numbers vary by program maturity and starting data hygiene, but they reflect realistic outcomes for disciplined pilots with proper governance.

How to structure an AI-driven pre-inspection playbook

Daily monitoring: automated ingestion of new regulatory postings and internal deviations, with a short list of high-priority flags.

Weekly drill: an AI-generated site brief for any upcoming inspection that summarizes the five most frequent recurring risks, the top 10 CAPAs by impact, and evidence bundles mapped to each expected question.

Sprint remediation: a two-week sprint to close the top X issues identified by AI and validated by SMEs, with a checklist for documentation and training.

Inspection day: AI-packaged evidence binder with sectioned documents and an “inspector brief” prepared for the team lead.

Post-inspection review: use the inspection record to retrain models and reduce false positive noise in future cycles.

Regulatory and ethical considerations

Document and defend how you use AI in regulated decision-making. Ensure your SOPs explain the model’s purpose and the human oversight applied to its outputs. If AI outputs influence regulatory responses (e.g., the content of an official reply), the validation and traceability requirements are higher. Maintain an ethics register covering bias risks (e.g., over-focusing on one site because it generates more data) and ensure your program does not inadvertently deprioritize patient safety concerns.

Conclusion — what advanced QA teams must decide next

AI is not optional for teams that want to reduce surprise and manage inspection risk at scale. The choices are practical: treat AI as a governed, validated, repeatable capability; make initial pilots conservative and measurable; invest in data hygiene and integration with existing QMS; and build human review into every critical decision. When these steps are followed, AI becomes an amplifier of expert judgment, not a replacement. Teams that move deliberately will shorten inspection prep cycles, improve CAPA effectiveness, and reduce the probability of repeat findings.