tl;dr: Data silos slow life sciences R&D, causing inefficiencies and errors. The Model Context Protocol (MCP) enables AI systems to access and integrate data across departments securely, breaking silos, streamlining workflows, and accelerating research and decision-making. Early adoption of MCP is essential for faster, smarter, and more collaborative R&D.

The Challenge of Data Silos in Life Sciences R&D

Life sciences companies accumulate vast amounts of data from labs, clinical trials, and operations, but much of it remains locked away in departmental silos. Scientists often compare the situation to a kitchen shared by several chefs, each with their own pantry and recipe book and no common way to share ingredients or results. The result is slow, error-prone R&D: teams can’t easily find or trust all relevant information, so decisions are made on incomplete data and efforts are often duplicated or wasted. In one telling anecdote, a researcher spent months on an experiment that a colleague on the same floor had already completed, without either of them knowing.

Many experts note that this problem is widespread. A recent survey found 59% of pharma R&D professionals still cite siloed data as a major barrier. When teams can’t easily share information, decision-makers face “blind spots” that lead to errors and wasted resources. Breaking down these barriers is essential: unified access to data can accelerate discovery, reduce duplicative work, and unleash AI tools effectively across the organization.

What Is the Model Context Protocol (MCP)?

The Model Context Protocol (MCP) is an open standard (introduced by Anthropic in 2024) that lets AI systems connect on the fly to external data sources and tools. You can think of it as the “new HTTP” for AI agents: it provides a single, uniform way for AI assistants to retrieve data and invoke tools. In practical terms, any database, instrument, or software system can publish an MCP “endpoint,” and any MCP-capable AI application (such as ChatGPT, Claude, or a custom model) can discover and call it. One analyst described MCP as essentially giving AI the ability to “browse” databases and software, just as web browsers use HTTP to browse the internet.

Under the hood, an MCP server exposes its capabilities (for example, functions to search an electronic lab notebook or retrieve patient records) in a self-describing format. An AI client first asks the server, “What can you do?” and the server lists available tools (function names, inputs, outputs). The AI then selects the appropriate function and calls it with the necessary parameters. This enables the model to dynamically integrate new data sources without requiring custom code. Industry experts emphasize that MCP is designed for security and governance: it provides a “standardized way for AI systems… to connect with external data sources and services in a secure, controlled… manner”. In short, MCP transforms AI assistants from passive Q&A systems into active agents that can query and manipulate your data systems in a controlled way.

How MCP Works (Simply Explained)

MCP follows a client–server model. The AI application acts as the MCP client and sends a request to an MCP server (a wrapper around a data source) asking, “What can you do?” The server replies with a list of tools or functions, each described by its name, expected inputs, and outputs. For example, the list might include functions like search_experiments(term, date_range) or get_patient_records(id).

Once the AI sees these options, it chooses the right tool for the task. Suppose a scientist asks, “What clinical trials involve drug X?” The AI might decide to call the server’s search_trials(drug=X) function. The server runs that query and returns structured results (often as JSON). The AI then uses the returned data to form its answer. In other words, the AI doesn’t just guess or rely on outdated training data; it directly invokes your live data systems and uses the exact data and logic you’ve provided.
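The discovery-then-call exchange described above can be sketched in a few lines of Python. MCP messages are JSON-RPC 2.0, and the `tools/list` and `tools/call` method names follow the public specification; everything else here (the in-memory `TRIALS` table, the `handle_request` dispatcher, and the trial records themselves) is a made-up stand-in for a real server and transport.

```python
import json

# Hypothetical in-memory "clinical trials database" standing in for a real system.
TRIALS = [
    {"id": "TRIAL-001", "drug": "drug-x", "phase": 2},
    {"id": "TRIAL-002", "drug": "drug-y", "phase": 1},
    {"id": "TRIAL-003", "drug": "drug-x", "phase": 3},
]

# Tool descriptions the server advertises: name, description, JSON Schema for inputs.
TOOLS = [
    {
        "name": "search_trials",
        "description": "Find clinical trials that involve a given drug.",
        "inputSchema": {
            "type": "object",
            "properties": {"drug": {"type": "string"}},
            "required": ["drug"],
        },
    }
]


def search_trials(drug: str) -> list:
    """Return every trial record for the drug, ignoring the case of the query."""
    return [t for t in TRIALS if t["drug"] == drug.lower()]


def handle_request(request: dict) -> dict:
    """Toy dispatcher for the two JSON-RPC methods used in this sketch."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call" and params.get("name") == "search_trials":
        rows = search_trials(**params["arguments"])
        # Tool output goes back as content items; here, a single JSON text item.
        result = {"content": [{"type": "text", "text": json.dumps(rows)}], "isError": False}
    else:
        # Simplification: anything this toy server does not recognize is rejected.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Step 1: the client asks "What can you do?"
listing = handle_request({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
tool_names = [tool["name"] for tool in listing["result"]["tools"]]

# Step 2: the client calls the tool it picked, with arguments matching the schema.
reply = handle_request({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "search_trials", "arguments": {"drug": "drug-x"}},
})
trials = json.loads(reply["result"]["content"][0]["text"])
print(tool_names, [t["id"] for t in trials])
```

In a real deployment the requests would travel over a transport such as stdio or HTTP, and the model, not hand-written code, would choose the tool and fill in the arguments.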

Figure: A schematic of how the Model Context Protocol (MCP) connects an AI host to multiple data servers. Each MCP server wraps a specific resource (on-premise or cloud) and exposes it through a standard interface.

Compared to traditional APIs, MCP is more flexible. If a data provider updates its tools (for example, adds a new query parameter), an MCP server simply advertises the new options on the next client request. The AI adapts automatically without code changes. This dynamic approach lets AI assistants tap fresh data and tools as soon as they are available, greatly speeding up development. In sum, MCP turns any data service into an AI-ready extension: models can treat your data functions as if they were part of their own “brain,” calling them as needed.
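A minimal sketch of that adaptation, assuming the client rebuilds its view of a tool from whatever schema the server advertises on each listing. The two schema versions and the `build_arguments` helper are hypothetical; in a real AI client the model fills in the arguments, but the principle of reading parameters from the advertised schema rather than hard-coding them is the same.

```python
def build_arguments(tool: dict, wanted: dict) -> dict:
    """Keep only the arguments the advertised schema accepts; check required ones."""
    schema = tool["inputSchema"]
    allowed = schema.get("properties", {})
    args = {k: v for k, v in wanted.items() if k in allowed}
    missing = [k for k in schema.get("required", []) if k not in args]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    return args


# Version 1 of a hypothetical tool: search by term only.
tool_v1 = {
    "name": "search_experiments",
    "inputSchema": {
        "type": "object",
        "properties": {"term": {"type": "string"}},
        "required": ["term"],
    },
}

# Version 2: the server has added an optional date_range parameter.
tool_v2 = {
    "name": "search_experiments",
    "inputSchema": {
        "type": "object",
        "properties": {"term": {"type": "string"}, "date_range": {"type": "string"}},
        "required": ["term"],
    },
}

# What the user's request implies; the client code is identical for both versions.
wanted = {"term": "kinase assay", "date_range": "2025-01/2025-06"}

args_v1 = build_arguments(tool_v1, wanted)  # date_range dropped: not advertised yet
args_v2 = build_arguments(tool_v2, wanted)  # date_range passed: now advertised
print(args_v1)
print(args_v2)
```

Because the client never hard-codes the parameter list, the new `date_range` option becomes usable as soon as the server advertises it.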

At the same time, another class of specialized tools is gaining traction in the life sciences sector. Atlas Compliance, for instance, is increasingly relied upon by leading pharmaceutical companies because of its large database of FDA Form 483s, warning letters, inspection histories, and supply chain monitoring records. What makes it stand out is the addition of an AI Copilot and advanced features that help quality and compliance teams detect risks earlier, benchmark against peers, and prepare for inspections with greater confidence. While MCP focuses on providing a universal protocol for connecting AI to data, platforms like Atlas demonstrate how domain-specific solutions can layer deep regulatory intelligence and compliance workflows on top.

How MCP Dismantles Data Silos

By giving AI a unified access layer, MCP effectively breaks down research silos. Organizations can wrap each data source (ELN, LIMS, clinical database, publication repository, etc.) in an MCP server, and then AI agents see them all through the same lens. The benefits include:

- Unified Data Access: AI models can query multiple data repositories through one interface. For example, GenomOncology’s open-source BioMCP lets an AI assistant search cancer trial records, genomics datasets, and medical literature all at once. Likewise, an MCP server for the Open Targets Platform exposes rich gene–disease and drug–target data. Instead of manually linking these sources, an AI agent can ask a single question and get integrated answers drawn from each dataset.

- Cross-Department Collaboration: When silos speak the same language, teams share insights effortlessly. An AI agent with MCP access can pull data from lab notebooks, quality management systems, and manufacturing records in one flow. For example, a model could do real-time “quality checks” by comparing experimental results against regulatory standards, since MCP can connect both R&D and compliance systems. In practice, this means no department has to hand off reports manually – everyone works from the same up-to-date data.

- Streamlined Workflows: MCP automates many routine tasks that used to be manual. What once took days of cross-department handoffs can happen in seconds. In one pilot, an Open Targets MCP server was integrated into a discovery bot so it could fetch relevant target biology data automatically during analysis. Well-designed MCP servers act like plug-and-play modules: once you publish an interface, any AI agent can immediately call it. This accelerates tasks like data gathering, hypothesis testing, and report generation.

Figure: An AI assistant (Claude) using an Open Targets MCP server. The user asks a biomedical question, the AI calls multiple MCP-exposed functions behind the scenes, and then returns a concise answer with data from the Open Targets knowledge base.

- Enhanced Data Governance: MCP makes data access explicit and auditable. Every function an AI agent can call is defined up front, and each call can be logged. In practice, this means AI-driven actions are as traceable as any enterprise transaction. Companies should treat MCP endpoints like “systems of record,” with version control, access reviews, and audit logs. For example, an MCP server can tag each tool or dataset by sensitivity (PHI, proprietary research, etc.) and enforce appropriate access controls. In short, MCP forces AI access to go through well-defined gates.
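The governance points in the last bullet can be sketched as a thin gate in front of each tool. This is an illustration under stated assumptions, not part of the MCP specification: the sensitivity labels, the role-to-clearance mapping, the tool names, and the `call_tool` wrapper are all hypothetical.

```python
from datetime import datetime, timezone

# Hypothetical sensitivity tag per tool, and the tags each role may access.
TOOL_SENSITIVITY = {
    "search_publications": "public",
    "search_experiments": "proprietary",
    "get_patient_records": "phi",
}
ROLE_CLEARANCE = {
    "research_assistant": {"public", "proprietary"},
    "clinical_assistant": {"public", "proprietary", "phi"},
}

AUDIT_LOG = []  # in a real deployment this would be durable, append-only storage


def call_tool(role: str, tool: str, arguments: dict) -> str:
    """Check clearance, record the attempt, and only then run the tool."""
    sensitivity = TOOL_SENSITIVITY.get(tool)
    allowed = sensitivity is not None and sensitivity in ROLE_CLEARANCE.get(role, set())
    # Every attempt is logged, whether or not it is allowed.
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "tool": tool,
        "arguments": arguments,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"{role} may not call {tool}")
    return f"ran {tool}"  # placeholder for the real tool implementation


call_tool("research_assistant", "search_experiments", {"term": "kinase assay"})
try:
    call_tool("research_assistant", "get_patient_records", {"id": "P-17"})
except PermissionError as exc:
    print("denied:", exc)

print([(entry["tool"], entry["allowed"]) for entry in AUDIT_LOG])
```

Logging denied attempts as well as successful ones is a deliberate choice here: access reviews usually need to see what an agent tried to do, not only what it was allowed to do.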

In summary, MCP lets AI models bridge formerly isolated systems. Rather than piecing together data by hand, researchers can rely on an AI assistant that sees all approved data sources as one unified knowledge base. This accelerates cross-functional projects, speeds up decision-making, and ensures everyone works from the same, up-to-date information.

Real-World MCP Applications in Life Sciences

Several pioneering projects show how MCP is already reshaping life science workflows:

- BioMCP (GenomOncology): In April 2025, GenomOncology released BioMCP, the first open-source MCP toolkit for biomedical data. BioMCP uses the Anthropic standard to connect AI to specialized medical databases. It supports “advanced searching and full-text retrieval” from oncology clinical trial registries, genomic datasets, and research publications. Crucially, the AI using BioMCP can refine queries conversationally: as GenomOncology’s CTO explains, the agent can start with a broad disease question and then delve into related trials and genetic factors, with the system “remembering everything” previously discussed.

- Open Targets Platform: The Open Targets project provides curated data on gene–disease associations and drug targets. In mid-2025, a community engineer built an MCP server for this platform. This wraps the Open Targets API into dozens of callable functions. In one demonstration, a researcher simply plugged this MCP connector into a chat interface, asked about a disease like schizophrenia, and received a structured answer listing the top associated genes. This showed that even non-technical users can harness complex genomics data via MCP without writing code.

These examples highlight that MCP isn’t just a theory – it’s already delivering real insights. By turning specialized databases into AI-readable tools, projects like BioMCP and the Open Targets MCP server are giving scientists conversational access to data that used to require expert queries. As more datasets are exposed via MCP, AI tools will grow smarter and more reliable, because their answers come directly from authoritative sources.

Adoption and Ecosystem Growth

The MCP ecosystem has expanded rapidly in 2025, with clear indicators of mainstream adoption:

- Explosive Server Growth: By mid-2025, thousands of MCP servers were online. Anthropic’s official directory listed over 5,800 servers by June (up from roughly 100 at the end of 2024). These include community-built connectors and official vendor services, reflecting global interest in the standard.

- Major Industry Support: The major AI and cloud companies now back MCP. For example, OpenAI added MCP to ChatGPT, Google integrated it into its Gemini AI, and Microsoft built it into Copilot Studio. Even cloud infrastructure vendors offer hosted MCP endpoints. In short, MCP is becoming a standard feature in AI platforms, which means life sciences firms can adopt it knowing the tech ecosystem is ready.

- Life Science Readiness: The life sciences IT ecosystem is already preparing for MCP adoption. Centralized data platforms, ELN/LIMS providers, and cloud database vendors are beginning to introduce MCP-compatible features. These platforms are designed to consolidate siloed data into unified environments, which is exactly what MCP needs to operate effectively. Once data is centralized, an MCP layer makes it instantly available to AI-driven applications. Vendors across the sector are moving in this direction: electronic lab notebook (ELN) tools are starting to expose MCP endpoints, cloud providers are experimenting with native connectors, and compliance software is being adapted to support MCP integration. This signals a growing awareness in the industry that standardized, AI-ready access to research data will soon be a competitive necessity rather than a nice-to-have.

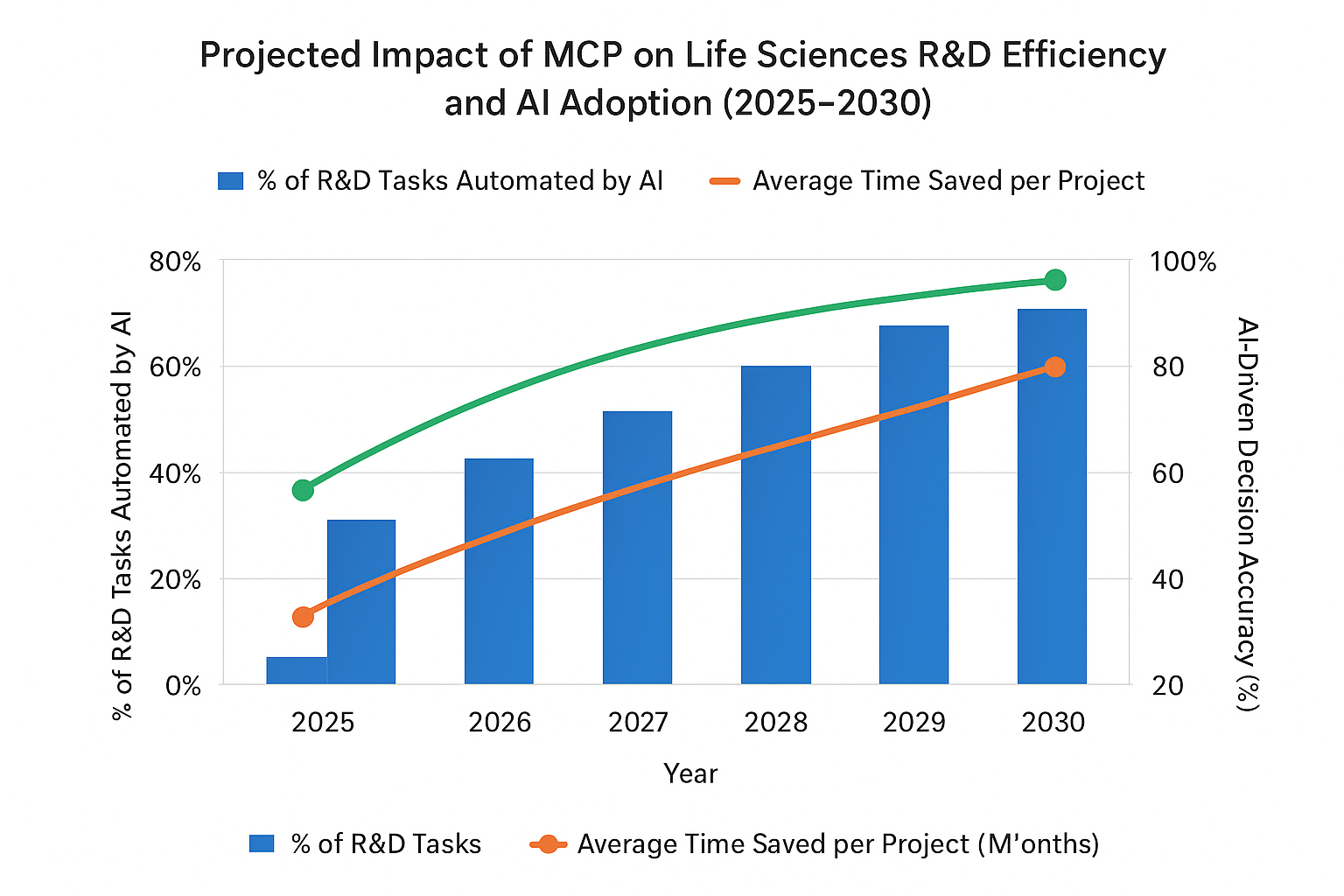

- Market Outlook: Analysts estimate that AI could unlock on the order of $350–$410 billion in annual value for pharmaceutical R&D by 2025. MCP can be a key enabler of that value because it allows AI to reach all relevant data. With thousands of connectors live and top vendors on board, MCP looks poised to become the default enterprise data layer for AI in life sciences. In short, every indicator points to a booming ecosystem that life sciences organizations can plug into.

In summary, MCP adoption is no longer hypothetical. Thousands of servers exist, major vendors support it, and life sciences IT platforms are aligning to leverage it. Companies that adopt MCP now will have a head start in deploying AI across their data assets.

Future Impact on Innovation and AI

Looking ahead, MCP’s impact on life sciences R&D promises to be profound:

- Faster Drug Discovery: By letting AI agents synthesize chemistry, biology, and clinical data instantly, MCP can dramatically shorten R&D cycles. For example, an AI design tool might propose a new molecule and immediately consult toxicity databases, pathway models, and patient data (all via MCP) before iterating. This real-time feedback loop means researchers can test and refine candidates much faster. In effect, AI agents could “know” the latest lab results and literature when evaluating each idea, helping teams catch promising leads earlier.

- Agentic AI in the Lab: MCP lays the groundwork for autonomous AI workflows. Imagine telling an AI: “Analyze last week’s experiments and suggest next steps.” The AI would use MCP to retrieve raw data from LIMS, run analytics, and report back. It could even schedule experiments or order reagents by interacting with inventory systems via MCP. In other words, MCP turns AI into a practical lab assistant, letting it drive complex processes with minimal human handholding.

- Advanced AI & Compliance: MCP also enables advanced, multi-modal AI. Future models could blend text, image, and structured data by calling MCP servers for each modality. For example, a pathology AI could fetch relevant microscopy images and genetic profiles on the fly. Importantly, healthcare-specific MCP extensions (sometimes called “HMCP”) are being developed with compliance built in: they use secure authentication, encryption, and audit logging to meet HIPAA and GxP standards. This means powerful AI-driven analysis can be done in a controlled, auditable way.

- Strategic Advantage: Finally, early adopters will gain a significant edge. Using MCP to integrate data feeds means faster innovation and more agile decision-making. As one industry analysis notes, MCP “unlocks automation at a level never before possible”. In a data-rich field like pharmaceuticals, being able to leverage every piece of information via AI is a clear competitive differentiator. Firms that build MCP pipelines now will be well-positioned to lead the coming AI-driven wave of discovery.

Overall, MCP is more than a technical protocol: it is a strategic enabler for next-generation R&D. By unlocking connected data, it will allow scientists to develop medicines more efficiently and intelligently than ever before.

Conclusion: MCP on the Strategic Roadmap

Data silos have long held back life sciences innovation. The Model Context Protocol offers a powerful solution by creating a common integration layer across those silos. By standardizing how AI models access data and tools, MCP makes real-time insights and automated workflows possible. Early projects like BioMCP and the Open Targets MCP server already show how this approach delivers tangible benefits.

For pharma and biotech leaders, the message is clear: MCP should be on your strategic roadmap. It aligns with digital transformation goals (FAIR data, cloud analytics, AI/ML pipelines) and directly addresses R&D bottlenecks. Start with small pilots – for example, expose a key internal dataset via MCP and hook it into an AI assistant – to demonstrate quick wins. Those early successes will build momentum and clarify how MCP can scale across your organization.

With the industry investing heavily in AI (analysts forecast hundreds of billions in annual value from pharma AI), ignoring protocols like MCP would be a missed opportunity. Thousands of MCP connectors and servers already exist, and major AI platforms support the standard out of the box. In short, MCP isn’t just an IT detail – it’s a foundation for the future of pharma R&D. Companies that embrace it now will break down silos, transform collaboration, and fully harness the power of AI-driven innovation.

Frequently Asked Questions (FAQ)

Q1- What exactly is MCP, and why is it relevant to life sciences R&D?

A1- MCP (Model Context Protocol) is an open standard that lets AI models dynamically connect to external data sources and software. In life sciences, much R&D data lives in ELNs, LIMS, and databases. MCP turns those systems into AI-accessible tools, meaning an assistant can query them directly rather than guessing. Essentially, it provides a universal API for AI: models can retrieve live laboratory and clinical data through MCP instead of relying on outdated or fragmented information.

Q2- How do we start adopting MCP in our organization?

A2- Begin with a pilot. Identify a high-value dataset or system (for example, a compound registry or patient database) and wrap it with an MCP server. Anthropic’s GitHub provides the MCP specification and SDKs for popular languages. Many AI platforms (like ChatGPT, Claude, etc.) already allow adding custom MCP connectors. In practice, you might expose just one data source via MCP and then test the workflow by asking an AI a question that requires that data. This helps validate the integration and familiarizes your team with the protocol.

Q3- What are the main benefits of using MCP?

A3- The primary benefit is unified data access. An MCP-enabled AI can retrieve data from multiple silos in one query, saving time and reducing errors. This leads to faster insights and fewer duplicated efforts. MCP also improves collaboration: when all teams expose data via MCP, everyone’s AI tools draw from the same up-to-date knowledge base. In practice, companies can expect quicker analyses (an AI can compile reports in minutes) and better-informed decisions because the AI is using the best available data.

Q4- What are the challenges or risks of MCP?

A4- The main challenge is governance. MCP lets AI act on your systems, so you must secure and monitor those actions. The biggest risks are unauthorized access and AI “hallucinations” from bad inputs. Mitigation steps include strong authentication for each MCP call, vetting and versioning each tool, and logging every AI query. In regulated environments, you should also audit AI-driven decisions just as you audit any critical process. Design each MCP interface with security in mind and treat MCP endpoints as you would any sensitive application.

Q5- How does MCP fit with our existing data infrastructure?

A5- MCP is designed to complement what you already have. You keep your databases, LIMS, ELNs, and analytics tools in place; you simply add MCP interfaces on top. For example, a centralized data platform (such as one built by Atlas Systems) can consolidate siloed data. If that platform exposes an MCP endpoint, then any AI agent can query it directly. In short, MCP provides an AI-friendly layer on your existing infrastructure. It doesn’t replace systems – it just makes them accessible to AI in a standard way.

Q6- What ROI can we expect from implementing MCP?

A6- ROI comes primarily from efficiency gains and better outcomes. Measure how much time MCP-enabled AI saves on routine tasks (like gathering data or generating reports) and how project timelines shorten. For example, if MCP-driven AI eliminates one redundant experiment, that could save months of work and millions of dollars. Analysts estimate that AI could unlock ~$350–$410 billion in annual pharma value by 2025; MCP helps your organization capture more of that by using its data effectively. In practice, ROI might look like shorter development cycles, fewer late-stage failures, and more breakthroughs.

Q7- Is MCP secure and compliant with regulations?

A7- MCP itself is just a protocol, so security depends on implementation. Use strong authentication (OAuth2, API keys) and encrypt all data in transit. In sensitive settings (PHI, GxP data), design your MCP deployment for compliance from the start. For example, a reference healthcare-MCP implementation used encrypted OAuth2 tokens, TLS, tenant isolation, and detailed audit logs to meet HIPAA/GxP requirements. In short, treat each MCP server like any critical application: apply the same security standards (access controls, monitoring, validation) that you would for your databases or cloud services.
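As one illustration of per-call authentication, here is a sketch using only the Python standard library: a signed, expiring token that a server checks before any tool runs. It is a simplified stand-in for a real OAuth2 flow, not a replacement for one, and the secret, the token format, and the function names are all assumptions.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"demo-secret-do-not-use"  # hypothetical; load from a secrets manager in practice


def issue_token(client_id: str, expires_at: int) -> str:
    """Create a token of the form client_id.expiry.signature."""
    payload = f"{client_id}.{expires_at}"
    signature = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}.{signature}"


def verify_token(token: str, now: Optional[int] = None) -> Optional[str]:
    """Return the client id if the token is authentic and unexpired, else None."""
    try:
        client_id, expires_at, signature = token.rsplit(".", 2)
        expiry = int(expires_at)
    except ValueError:
        return None  # malformed token
    payload = f"{client_id}.{expires_at}"
    expected = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    # compare_digest takes constant time, so it does not leak how much matched.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    current = int(time.time()) if now is None else now
    return client_id if current < expiry else None


token = issue_token("discovery-bot", expires_at=2_000)
print(verify_token(token, now=1_000))        # valid token
print(verify_token(token, now=3_000))        # expired token
print(verify_token(token + "0", now=1_000))  # tampered signature
```

A server would run a check like `verify_token` on every incoming call, over TLS, and write the result to its audit log before dispatching to a tool.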

Q8- How do we measure success with MCP?

A8- Start with clear goals. Metrics might include time saved (e.g., reduction in hours to compile reports or find data), throughput (more experiments or queries completed), and model accuracy (AI answers grounded in authoritative data). You can compare key workflows before vs. after MCP to see speed-ups, or track adoption (how many teams use MCP-enabled tools). Survey users: do they get answers faster or need fewer follow-ups? Ultimately, success is faster insights and better decisions. When scientists spend less time wrangling data and more time innovating, you’ll know MCP is delivering value.

Q9- Where can we find MCP resources or partners?

A9- The MCP ecosystem is expanding quickly. Anthropic’s GitHub repo has the official protocol spec and SDKs. Community directories like the Glama MCP catalog and PulseMCP list thousands of available servers (including general-purpose and domain-specific connectors). In life sciences, look at open-source projects on GitHub – for example, GenomOncology’s BioMCP and the Open Targets MCP connectors.