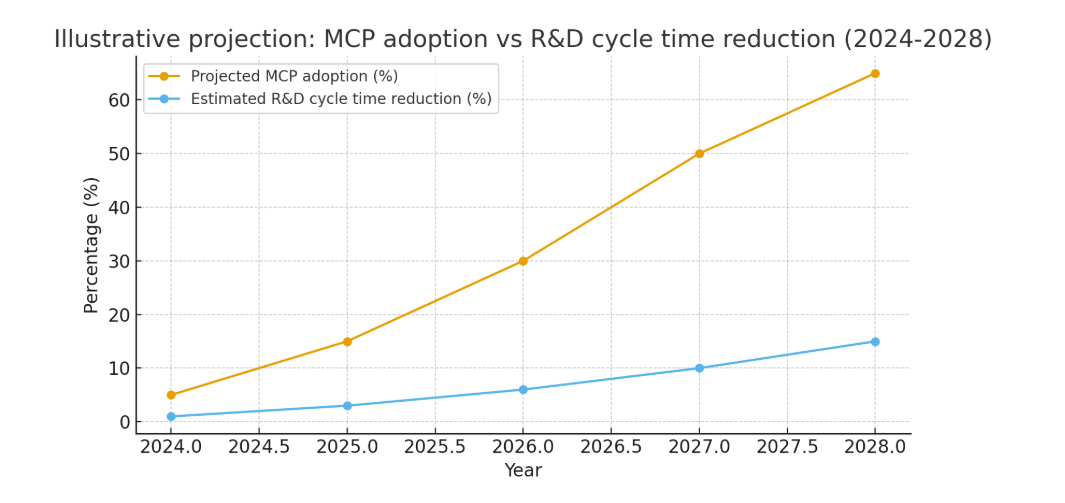

tl;dr: Yes, MCP (Model Context Protocol) can enable near real-time decision-making across global R&D hubs without necessarily compromising compliance, but only if organizations adopt secure MCP architectures, strong governance (data lineage, access control, validation), and a risk-based regulatory strategy aligned with current agency expectations. MCP is a connector layer that makes AI agents context-aware and tool-enabled; used correctly, it reduces manual handoffs, preserves reproducibility, and speeds decisions. However, real risks remain (supply-chain attacks, misconfigured servers, model drift, and gaps in audit evidence), and these must be managed through technical controls, validation, and documented lifecycle practices. The projection chart above is illustrative only: it visualizes hypothetical MCP adoption and its potential R&D impact.

Can MCP enable real-time decision-making across global R&D hubs without compromising compliance?

Pharma and life-science R&D increasingly operate as distributed, global systems. Teams in discovery, formulation, process development, clinical operations, and manufacturing often work from multiple hubs across continents. Leaders want faster, more confident decisions about, for example, whether an assay run is acceptable, whether a batch can be released, or whether a clinical signal requires rapid action. At the same time, regulators insist on reproducibility, traceability, and documented controls. The Model Context Protocol (MCP) is a technical standard that promises to connect AI agents to data sources and tools in a unified way. This article explains how MCP can support real-time decision-making, the compliance opportunities it unlocks, and the technical and governance controls needed to avoid regulatory and security pitfalls.

What is MCP, in plain language?

Simply put, the Model Context Protocol is an open standard that lets AI assistants and agent systems connect to your internal data stores and tools in a consistent, pluggable way. Think of MCP as a standardized API and permissioning model: an MCP server provides context (files, database records, schema information) to a model and exposes specific actions ("tools") that the model can request. How secure those connections are depends on how the servers are deployed and permissioned, not on the protocol alone. Because it is standardized, teams don’t need to write bespoke connectors for every model-to-service link. That makes integrations faster, more repeatable, and easier to audit.
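To make "request specific actions" concrete: MCP messages use JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request whose `params` carry the tool `name` and its `arguments`. The sketch below only builds such a message. The tool name `get_stability_runs` and its arguments are hypothetical, and a real deployment would send the request through an MCP SDK over a transport such as stdio or HTTP rather than hand-assembling JSON.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message string."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool that an internal MCP server might expose to agents.
request = make_tool_call(
    request_id=1,
    tool_name="get_stability_runs",
    arguments={"product": "mAb-X", "assay": "impurity", "limit": 20},
)
print(request)
```

Because every client speaks the same message format, the server becomes the natural single place to implement authentication, field filtering, and logging.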

Why does MCP matter for global R&D decision speed?

- Single integration point. Instead of dozens of bespoke integrations, an MCP server exposes verified datasets and tools to multiple AI clients. That reduces integration time and reduces the chance of an undocumented data flow, a classic compliance blind spot.

- Context preservation. An MCP server can deliver context (metadata, audit trail, schema info) alongside data. When an AI agent proposes a decision, it can also return the context used to make that decision: who accessed what, which baseline data, and which code or model version. This improves reproducibility.

- Automation of routine checks. Agents connected through MCP can run prebuilt validation checks (e.g., outlier detectors, stability flags, GMP checklists) before presenting a decision, potentially shaving hours from manual review cycles.

- Localized control + centralized policy. An MCP architecture can enforce local access rules while exposing a common policy layer, enabling faster local decisions without losing central governance.

These features, taken together, translate directly into speed: fewer manual handoffs, fewer email attachments, and faster aggregation of evidence required for decisions.
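The "localized control + centralized policy" pattern can be sketched as a default-deny check that grants a request only when both the central policy and the hub's local rules allow it. Everything here (hub names, roles, sensitivity tiers, the `is_allowed` function) is a hypothetical illustration, not part of the MCP specification; a production system would more likely delegate this decision to a dedicated policy engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    hub: str          # hypothetical hub identifier, e.g. "shanghai"
    role: str         # requester role, e.g. "agent" or "qa_reviewer"
    dataset: str      # dataset identifier
    sensitivity: str  # hypothetical tiers: "public", "internal", "restricted"


# Hypothetical central policy: which roles may read each sensitivity tier.
CENTRAL_POLICY = {
    "public": {"analyst", "qa_reviewer", "agent"},
    "internal": {"analyst", "qa_reviewer", "agent"},
    "restricted": {"qa_reviewer"},
}

# Hypothetical local rules: the datasets each hub has chosen to expose.
LOCAL_EXPOSURE = {
    "boston": {"formulation_runs"},
    "shanghai": {"stability_runs", "assay_results"},
    "ireland": {"batch_records"},
}


def is_allowed(req: AccessRequest) -> bool:
    """Grant access only if BOTH the central policy and the local hub rules allow it.

    Unknown tiers, hubs, or datasets fall through to an empty set, so the
    default is deny.
    """
    central_ok = req.role in CENTRAL_POLICY.get(req.sensitivity, set())
    local_ok = req.dataset in LOCAL_EXPOSURE.get(req.hub, set())
    return central_ok and local_ok
```

For example, `is_allowed(AccessRequest("shanghai", "agent", "stability_runs", "internal"))` returns `True`, while an agent asking Ireland for restricted batch records is refused by the central tier even though the hub exposes that dataset.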

Compliance is not optional: what regulators require now

Regulators are actively updating guidance to address AI used in regulatory decisions. The U.S. FDA, for example, issued draft guidance in January 2025 ("Considerations for the Use of Artificial Intelligence to Support Regulatory Decision-Making for Drug and Biological Products") describing a risk-based credibility assessment framework for AI models used to support regulatory decision-making. The guidance emphasizes transparency, a documented context of use, model maintenance, and lifecycle management. In practice, any AI-assisted output presented to regulators should be explainable, supported by evidence, and validated for its context of use.

Therefore, MCP adoption must be paired with documented governance that maps directly to these regulatory expectations.

How MCP helps meet regulatory expectations (concrete ways)

- Traceable data lineage: Because the MCP server brokers every connection, it can be configured to attach metadata and digital provenance to every data fetch (timestamps, dataset version, user identity, and checksums). These records support the audit trails required by good documentation practices and electronic records regulations such as 21 CFR Part 11.

- Reproducibility of AI outputs: If each MCP response records the exact dataset snapshot and tool versions used, a reviewer can rerun the same operations and reproduce the result. That is crucial for regulatory submissions and inspection evidence.

- Controlled exposure of sensitive data: MCP servers can enforce scope-limited access (only the fields or queries needed) so that protected health information (PHI) or commercially confidential information (CCI) is not unnecessarily exposed to models or agents. This supports the HIPAA minimum-necessary standard and data minimization more broadly.

- Centralized policy enforcement: Security and compliance controls (encryption, key rotation, vetting of MCP clients) can be applied centrally to MCP endpoints, simplifying compliance oversight.
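A provenance record for a single data fetch might look like the sketch below. The field names, the dataset ID, and the `agent:qc-trend` identity are hypothetical; the one design point worth copying is the canonical serialization (sorted keys, fixed separators), without which the same data could hash differently and later verification would fail for no real reason.

```python
import hashlib
import json
from datetime import datetime, timezone


def canonical_bytes(rows: list[dict]) -> bytes:
    """Serialize rows deterministically so identical data always yields the same checksum."""
    return json.dumps(rows, sort_keys=True, separators=(",", ":")).encode("utf-8")


def provenance_record(dataset_id: str, version: str, user: str, rows: list[dict]) -> dict:
    """Hypothetical provenance metadata attached to one MCP data fetch."""
    return {
        "dataset_id": dataset_id,
        "dataset_version": version,
        "user": user,
        "fetched_at": datetime.now(timezone.utc).isoformat(),
        "sha256": hashlib.sha256(canonical_bytes(rows)).hexdigest(),
    }


def verify(rows: list[dict], record: dict) -> bool:
    """Re-check archived rows against the checksum captured at fetch time."""
    return hashlib.sha256(canonical_bytes(rows)).hexdigest() == record["sha256"]


rows = [{"run_id": "R-101", "impurity_pct": 0.42}]  # illustrative values only
record = provenance_record("stability_runs", "v12", "agent:qc-trend", rows)
```

An auditor who later reloads the archived rows can call `verify(rows, record)`; any change to a value, however small, produces a different digest.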

Risks and real incidents: what to watch for

No technology is risk-free. A recent security finding, reported by ITPro, showed that a malicious version of an MCP server package exfiltrated emails by silently BCCing them to an attacker. The case illustrates real supply-chain and permissioning risks when MCP components are not properly vetted. Any MCP architecture that grants broad permissions to external components can become a high-impact attack surface.

Other risks include:

- Misconfigured permissions: Overbroad access could expose drafts, IP, or PHI.

- Model drift and validation gaps: Models change over time; decisions they generate must be monitored for performance degradation.

- Weak audit artifacts: If an MCP implementation does not record sufficient contextual metadata, reproducibility and auditability fail.

- Operational silos: If local hubs bypass central MCP policy, inconsistent practices proliferate.

Technical blueprint for compliant, real-time MCP use in pharma R&D

Below is a practical blueprint that R&D and QA teams can start with.

1) Secure MCP server architecture

- Host MCP servers within the organization’s controlled cloud or an accredited private environment.

- Apply strong authentication (mutual TLS) and zero-trust access controls for MCP clients.

- Implement a “least privilege” model for data queries; require explicit schema agreements for any exposed dataset.

- Use software supply-chain hygiene: pin MCP server versions, scan packages, and enforce code signing. (The email-exfiltration incident described above shows why this matters.)
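The "least privilege" and "explicit schema agreement" bullets can be combined in a server-side projection step: a dataset is only queryable if it has an agreement, and only the agreed fields ever leave the server. The agreement contents, the dataset and field names, and `project_rows` are all hypothetical.

```python
# Hypothetical schema agreements: the only fields each dataset may expose to agents.
SCHEMA_AGREEMENTS = {
    "stability_runs": {"run_id", "timepoint_months", "impurity_pct", "lot_id"},
}


def project_rows(dataset: str, rows: list[dict], requested: set[str]) -> list[dict]:
    """Return only the requested fields, refusing any field outside the schema agreement."""
    allowed = SCHEMA_AGREEMENTS.get(dataset)
    if allowed is None:
        raise PermissionError(f"no schema agreement for dataset {dataset!r}")
    disallowed = requested - allowed
    if disallowed:
        raise PermissionError(f"fields not covered by agreement: {sorted(disallowed)}")
    return [{k: row[k] for k in requested if k in row} for row in rows]


# Illustrative source rows: the analyst name must never reach the agent.
source_rows = [
    {"run_id": "R-101", "impurity_pct": 0.42, "lot_id": "L-7", "analyst_name": "J. Doe"},
]
agent_view = project_rows("stability_runs", source_rows, {"run_id", "impurity_pct"})
```

Failing closed (raising instead of silently dropping disallowed fields) is a deliberate choice: an overbroad query then shows up in logs and error reports rather than being quietly trimmed.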

2) Data governance and provenance

- Require dataset snapshots for decision-support actions. Each MCP call that influences a decision should return a dataset ID, checksum, extraction query, and timestamp.

- Store reproducibility bundles (inputs + model version + toolchain) in an immutable archive for inspection. This supports 21 CFR Part 11-style requirements for electronic records.
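One way to approximate an "immutable archive" in code is content addressing: each reproducibility bundle is stored under its own SHA-256 digest and written with exclusive-create mode, so this code can never overwrite an archived bundle. This is a sketch under assumptions (local files, hypothetical bundle fields); genuine immutability for inspection purposes would also require write-once storage controls at the infrastructure level.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def archive_bundle(archive_dir: Path, bundle: dict) -> str:
    """Store a reproducibility bundle under its own SHA-256 digest; never overwrite."""
    data = json.dumps(bundle, sort_keys=True, separators=(",", ":")).encode("utf-8")
    digest = hashlib.sha256(data).hexdigest()
    archive_dir.mkdir(parents=True, exist_ok=True)
    path = archive_dir / f"{digest}.json"
    try:
        # Mode "xb" fails if the file already exists, so archived bytes are never replaced.
        with open(path, "xb") as fh:
            fh.write(data)
    except FileExistsError:
        pass  # identical content is already archived under the same digest
    return digest


with tempfile.TemporaryDirectory() as tmp:
    bundle = {  # hypothetical bundle contents
        "dataset_id": "stability_runs",
        "dataset_version": "v12",
        "query": "SELECT * FROM stability_runs WHERE lot_id = 'L-7'",
        "model_version": "qc-trend-0.3",
        "toolchain": {"python": "3.11"},
    }
    first = archive_bundle(Path(tmp), bundle)
    second = archive_bundle(Path(tmp), bundle)  # re-archiving is a no-op
    stored = json.loads((Path(tmp) / f"{first}.json").read_text(encoding="utf-8"))
```

Because the file name is the digest of its contents, an inspector can recompute the hash of any archived bundle and confirm it has not changed since it was written.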

3) Model validation and lifecycle

- Validate AI agents for each intended context of use (COU) using a risk-based credibility framework, similar to what regulators now recommend. Validation should include performance metrics, failure modes, and statistical evidence.

- Monitor models in production and set alerting thresholds for drift. Keep retraining and redeployment controlled through change control and documentation.
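The text does not prescribe a drift metric. One common choice, swapped in here for illustration, is the Population Stability Index (PSI), which compares a production distribution against the validation-time baseline over the same bins. The bin proportions are made up, and the 0.2 alert threshold is an assumption: teams typically pick rule-of-thumb values somewhere in the 0.1 to 0.25 range and document the choice in change control.

```python
import math


def population_stability_index(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """PSI between two binned distributions, each given as proportions summing to 1."""
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) and division by zero for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


DRIFT_ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb value, not a regulatory requirement

baseline = [0.25, 0.25, 0.25, 0.25]    # illustrative validation-time score distribution
production = [0.10, 0.20, 0.30, 0.40]  # illustrative current production distribution
psi = population_stability_index(baseline, production)
if psi > DRIFT_ALERT_THRESHOLD:
    print(f"Drift alert: PSI={psi:.3f}; open a change-control review")
```

With these illustrative numbers the PSI is about 0.228, so the alert fires; an identical distribution gives exactly 0.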

4) Human-in-the-loop (HITL) design patterns

- For high-risk decisions (e.g., batch release, safety signals), require a human sign-off with the agent’s rationale and evidence bundle attached. For lower-risk triage tasks, the agent may perform automated actions under a documented SOP with a full audit trail.

- Design for reviewer ergonomics: the UI should present the decision, supporting data, uncertainty level, and recommended next steps.
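The HITL rule above reduces to a routing decision keyed on risk tier. In this sketch the decision types and their tiers are hypothetical placeholders for what a COU risk assessment would produce. Unknown decision types default to human sign-off, so a newly added workflow cannot silently become fully automated.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    HIGH = "high"


# Hypothetical mapping; in practice this would come from the documented COU risk assessment.
DECISION_RISK = {
    "qc_trend_triage": Risk.LOW,
    "batch_release": Risk.HIGH,
    "safety_signal": Risk.HIGH,
}


def route(decision_type: str) -> str:
    """Return how an agent recommendation is handled; unknown types default to HIGH risk."""
    risk = DECISION_RISK.get(decision_type, Risk.HIGH)
    return "auto_action_per_sop" if risk is Risk.LOW else "require_human_signoff"
```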

5) Auditability and regulatory packaging

- Create “decision packages” automatically: these include the MCP provenance log, dataset snapshot, code versions, model validation summary, and human reviewer signature. Decision packages can shorten inspection cycles and support submissions.
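A decision package is only useful if it is complete, so a simple guard can refuse to finalize one with any element missing. The field names mirror the bullet above but are otherwise hypothetical. Note that `reviewer_signature` is only a placeholder: a Part 11-compliant electronic signature involves verified identity, the meaning of the signature, and a binding to the signed record, none of which a plain field provides.

```python
REQUIRED_FIELDS = (
    "provenance_log",
    "dataset_snapshot_id",
    "code_versions",
    "model_validation_summary",
    "reviewer_signature",  # placeholder for a proper electronic-signature record
)


def finalize_package(package: dict) -> dict:
    """Refuse to finalize a decision package that is missing any required element."""
    missing = [field for field in REQUIRED_FIELDS if not package.get(field)]
    if missing:
        raise ValueError(f"decision package incomplete, missing: {missing}")
    return {**package, "status": "final"}
```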

Operational benefits and measurable outcomes

When implemented correctly, MCP-enabled workflows can deliver measurable gains:

- Faster cycle times: Less time is spent aggregating data across hubs; decisions that previously took days could be compressed to hours. (The illustrative projection chart at the top of this article shows hypothetical adoption vs. estimated R&D cycle-time reduction.)

- Higher reproducibility: Standardized connectors improve the ability to reproduce analytic runs.

- Reduced manual error: Automating routine checks reduces transcription and interpretation errors.

- Scalable documentation: Automatic generation of audit artifacts reduces manual documentation effort and improves inspection readiness.

For context, global R&D trends show continuing pressure to increase R&D efficiency and productivity. Industry reports tracking R&D investment and productivity emphasize the need for technical enablers that reduce time-to-decision and increase throughput, exactly the use cases MCP targets.

Practical scenario: an end-to-end example

Imagine a global biologics company with formulation teams in Boston, analytical labs in Shanghai, and manufacturing in Ireland. A stability assay run flags an impurity spike. Here is how an MCP-enabled flow would work:

- The lab system posts the run results to an MCP-exposed dataset snapshot.

- An AI agent queries the MCP server for recent runs, historical baselines, and process parameters. The server returns the exact dataset snapshot, its provenance metadata, and the output of any compute tool the agent calls.

- The agent runs root-cause checks (statistical control charts, correlation with raw material batches). It packages the evidence and makes a recommendation: “Hold the batch pending confirmatory test.”

- The system automatically creates a decision package (dataset IDs, checksums, model version, and suggested actions). A QA reviewer in Ireland receives the package, sees the evidence, and signs off. The sign-off is recorded with the package and archived.

- If regulators later inspect, the company can reproduce the agent’s reasoning by reloading the same dataset snapshot and model code.

This flow preserves speed (near real-time insight) and compliance (audit package, human sign-off, reproducibility).
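The root-cause step in this scenario mentions statistical control charts. A minimal Shewhart-style check is sketched below: it flags a new impurity result that falls outside mean ± 3σ of historical runs. The historical values are made up, and sigma is estimated with the sample standard deviation as a simplification; individuals charts in practice often estimate sigma from the average moving range instead.

```python
from statistics import mean, stdev


def control_limits(baseline: list[float], k: float = 3.0) -> tuple[float, float]:
    """Shewhart-style limits: mean +/- k * sample standard deviation of historical runs."""
    m, s = mean(baseline), stdev(baseline)
    return m - k * s, m + k * s


def recommend(new_value: float, baseline: list[float]) -> str:
    """Turn a single out-of-limits result into the scenario's hold recommendation."""
    low, high = control_limits(baseline)
    if new_value < low or new_value > high:
        return "Hold the batch pending confirmatory test"
    return "Within historical control limits"


historical_impurity_pct = [0.30, 0.32, 0.31, 0.29, 0.33, 0.30, 0.31]  # made-up baseline
print(recommend(0.45, historical_impurity_pct))
```

With this baseline the upper limit is roughly 0.349%, so a 0.45% result produces the hold recommendation that the QA reviewer then confirms or overrides.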

Governance checklist for leaders (quick, actionable)

- Map the MCP endpoints and inventory the data they expose.

- Classify datasets by sensitivity and apply data minimization.

- Mandate provenance metadata for every MCP call.

- Define COUs and validate models per COU.

- Enforce software supply chain controls for the MCP server and clients.

- Build automated decision packages and retention policies.

- Train staff in HITL oversight and incident response.

- Pilot in a controlled area (e.g., non-GMP analytics) before extending to GMP decision points.

Policy alignment and regulator signals

Regulators are not banning AI; instead, they are setting expectations for credible, transparent AI use. The FDA’s draft guidance sets out a risk-based credibility assessment framework and highlights the need for documentation and lifecycle management for models used in regulatory decisions. Firms implementing MCP must design their validation and documentation to meet those expectations.

At the same time, policy signals (e.g., moves toward New Approach Methodologies and increased AI acceptance in nonclinical evidence) show regulators value reliable, reproducible evidence streams that speed safe decisions. This regulatory context opens a window for MCP adoption, but only if compliance steps are not an afterthought.

Security and supply-chain hardening: technical controls you must deploy

- SBOM & signed releases: Keep a Software Bill of Materials (SBOM) for all MCP components; require code signing.

- Runtime isolation: Run MCP servers in segmented networks with strict egress rules.

- Access monitoring: Log every MCP request and regularly review privileges.

- Vulnerability scanning & patching: Regularly scan MCP server containers and dependencies. The email-exfiltration incident described earlier showed how a malicious package version can introduce exfiltration risk.
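Version pinning and the SBOM become enforceable when the deployment pipeline refuses any artifact whose SHA-256 digest does not match the one recorded at approval time. The sketch below assumes a simple name-to-digest pin table; the artifact name is hypothetical. Digest pinning detects tampering or substitution after approval, but it does not replace code signing or review: a malicious version that was pinned would still pass.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Hash a file in chunks so large artifacts do not need to fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_artifact(path: Path, pins: dict[str, str]) -> bool:
    """True only if the artifact is pinned AND its SHA-256 matches the approved digest."""
    expected = pins.get(path.name)
    if expected is None:
        return False  # unpinned artifacts are rejected by default
    return hmac.compare_digest(sha256_file(path), expected)


with tempfile.TemporaryDirectory() as tmp:
    artifact = Path(tmp) / "mcp-server-example-1.0.0.tar.gz"  # hypothetical package file
    artifact.write_bytes(b"approved release contents")
    pins = {artifact.name: sha256_file(artifact)}  # recorded at release-approval time
    ok_before = verify_artifact(artifact, pins)
    artifact.write_bytes(b"tampered release contents")
    ok_after = verify_artifact(artifact, pins)
```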

Limits and realistic expectations

MCP is an enabler, not a magic bullet. Real-time decisions still depend on:

- The quality and timeliness of the underlying data.

- The maturity of models and their validation evidence.

- Organizational willingness to redesign processes and invest in governance.

Also, not all decisions should be fully automated; high-risk regulatory decisions should retain human oversight. The best path is a phased approach: pilot, validate, embed governance, scale.

Roadmap for piloting MCP in your organization (6–9 months)

- Month 0–1: Executive alignment, risk assessment, data inventory.

- Month 2–3: Build a sandbox MCP server exposing non-production datasets; implement auth and logging.

- Month 3–5: Develop a small agent that performs a low-risk task (e.g., QC trend analysis) and produces decision packages.

- Month 5–7: Validate the agent for its COU, run shadow trials alongside the existing process, and measure time saved and reproducibility.

- Month 7–9: Harden security, finalize SOPs, and expand to higher-value COUs with HITL controls.
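For the Month 5–7 shadow trials, the two headline numbers can be computed from a simple case log: how often the agent agreed with the existing process, and the median time saved per case. The log format and all values below are made up for illustration. In a real trial each disagreement would also be reviewed individually rather than only counted.

```python
from statistics import median

# Hypothetical shadow-trial log: agent and existing process evaluated on the same cases.
shadow_cases = [
    {"case": "C1", "agent": "hold", "existing": "hold", "agent_hours": 2.0, "existing_hours": 30.0},
    {"case": "C2", "agent": "release", "existing": "release", "agent_hours": 1.5, "existing_hours": 20.0},
    {"case": "C3", "agent": "hold", "existing": "release", "agent_hours": 3.0, "existing_hours": 26.0},
    {"case": "C4", "agent": "release", "existing": "release", "agent_hours": 1.0, "existing_hours": 12.0},
]


def shadow_metrics(cases: list[dict]) -> dict:
    """Agreement rate with the existing process and median hours saved per case."""
    agreement = sum(c["agent"] == c["existing"] for c in cases) / len(cases)
    hours_saved = [c["existing_hours"] - c["agent_hours"] for c in cases]
    return {"agreement_rate": agreement, "median_hours_saved": median(hours_saved)}


metrics = shadow_metrics(shadow_cases)
print(metrics)
```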

Economic and strategic considerations

Adoption of MCP and agent-enabled decision workflows will not be universal overnight. The business case typically rests on a combination of reduced cycle time, reduced manual review costs, and better scalability of subject matter expertise. Industry trend reports show continuous pressure to improve R&D productivity and faster go/no-go decisions; technical enablers such as MCP help deliver on that strategic imperative.

Closing summary

MCP is a practical, standards-based connector that can help life-science organizations realize real-time, evidence-backed decisions across global R&D hubs. The upside is clear: faster, reproducible decisions and more scalable documentation for regulators. The downside is equally clear: supply-chain, permissioning, and model lifecycle risks that can erode compliance if ignored. The right approach is to pair MCP deployment with strong security, provenance, model validation, and human oversight, then scale from low-risk pilots to mission-critical COUs.

FAQs

1. What is MCP, and how does it support real-time decision-making in pharma R&D?

MCP (Model Context Protocol) is an open standard for connecting AI systems to data sources and tools. When deployed securely, it enables faster decisions by giving AI agents verified, context-rich access to R&D data, reducing manual handoffs and delays.

2. How does MCP ensure compliance while accelerating R&D workflows?

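MCP itself is a connection protocol; compliance comes from how it is deployed and governed. A well-governed MCP deployment can enforce access control and record data lineage, audit trails, and the inputs needed for reproducibility. When every dataset call and model action is logged, the resulting evidence packages can support regulatory requirements such as FDA 21 CFR Part 11.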

3. What risks come with using MCP for regulated decision-making?

Risks include supply-chain attacks on MCP servers, misconfigured permissions, model drift, and weak audit documentation. These risks must be mitigated with strong governance, validation, and human oversight.

4. Can MCP replace human reviewers in regulatory decision-making?

No. MCP can automate routine checks and aggregate evidence, but human-in-the-loop oversight is still required for high-risk regulatory decisions such as batch release or clinical safety assessments.

5. Where should pharma companies start with MCP adoption?

The best approach is to pilot MCP in low-risk, high-value areas (e.g., QC trend monitoring, non-GMP analytics). Once validated, expand to critical GMP and regulatory decision points with proper SOPs and compliance controls.